December 28th, 2018

Stream the dream – improving Lookback’s video infrastructure and Live player

Reliable, high-quality video has been a fundamental part of Lookback’s platform since the very beginning. We introduced our Live feature in 2016, and it has empowered customers to do remote moderated research ever since. Live lets you broadcast to the rest of your team – wherever they are – with participants from around the globe or right from your workplace.

With that said, serving and streaming video is … hard. Ensuring great audio-video quality and reliable connectivity (especially behind corporate firewalls) has proven to be tricky.

That’s why we’re incredibly happy and proud to let you know about our two latest advances in video infrastructure:

- Live streaming servers all over the world

- On-demand video serving to the player

…as well as an extra treat! Let’s begin!

1. Live streaming servers all over the world

Our Live server, code-named Dormammu, is our in-house technology for managing the audio and video for Live streaming. The server is effectively a selective forwarding unit that also records all video in the cloud as you’re streaming, ready to play back to you later when you re-watch your session (more on this later).

Our Live server in a nutshell:

- When you start a new Live session, the Live server is the core piece that makes sure that all parties are connected to each other.

- Uses the WebRTC protocol.

- Records the participant audio and video in a session, as well as the moderator audio.

- Supported by iOS, Android, and desktop.
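
To make "selective forwarding unit" a bit more concrete, here is a minimal sketch of the idea: every packet is forwarded unchanged to the other parties instead of being mixed on the server, and a copy is kept for the recording. All names here (`SelectiveForwardingUnit`, `MediaPacket`, the `Role` type) are hypothetical and made up for illustration; this is not Dormammu's actual code, and real WebRTC media handling is far more involved.

```typescript
// Illustrative only: hypothetical types, not Lookback's server code.
type Role = "moderator" | "participant" | "observer";

interface MediaPacket {
  from: string;              // id of the sending party
  kind: "audio" | "video";
  payload: Uint8Array;
}

type Deliver = (packet: MediaPacket) => void;

class SelectiveForwardingUnit {
  private peers = new Map<string, { role: Role; deliver: Deliver }>();
  private recorded: MediaPacket[] = [];

  join(id: string, role: Role, deliver: Deliver): void {
    this.peers.set(id, { role, deliver });
  }

  leave(id: string): void {
    this.peers.delete(id);
  }

  // Forward the packet as-is to everyone except the sender.
  // Participant audio/video and moderator audio are also kept for the recording.
  receive(packet: MediaPacket): void {
    const sender = this.peers.get(packet.from);
    if (!sender) return; // ignore packets from parties that have not joined

    const isParticipantMedia = sender.role === "participant";
    const isModeratorAudio = sender.role === "moderator" && packet.kind === "audio";
    if (isParticipantMedia || isModeratorAudio) {
      this.recorded.push(packet);
    }

    this.peers.forEach((peer, id) => {
      if (id !== packet.from) peer.deliver(packet);
    });
  }

  recordedPacketCount(): number {
    return this.recorded.length;
  }
}
```

The point of forwarding rather than mixing is that the server never has to decode and re-encode video in real time, which keeps added latency low.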

When doing real-time video streaming, latency is a parameter you have to take into account – the geographical distance to the server is important, and something we’ve measured in real-world scenarios. Until now, our server has lived in a single physical location: on the green hills of Ireland. That means all traffic has to go all the way to Ireland and back again.

No more. From now on, we have Live servers distributed all over the world.

What does this mean?

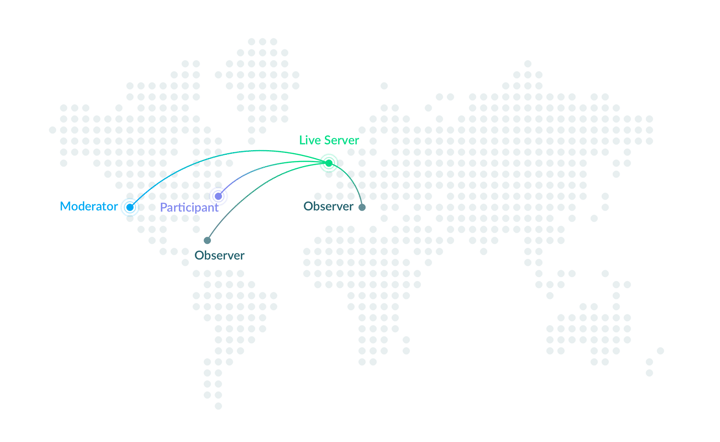

The Old Way

Let’s say you’re setting up a remote moderated session with the moderator in San Francisco, the participant in New York, and two coworkers observing from Florida and Greece, respectively. This is how the traffic used to go:

Everyone’s audio and video travels to and from one server location. This means that even if the moderator and participant are relatively close to each other, the network traffic still has to go over the Atlantic and back again. Even though we’ve got fast internets in 2018, that’s a physical trip even the fastest bits and bytes can’t do fast enough!
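
To get a feel for why that trip matters, here is a rough back-of-the-envelope sketch. It estimates the minimum one-way delay from distance alone, assuming light travels at roughly 200 km per millisecond in optical fibre and that cables follow the great-circle route (real routes are longer, and routers add more delay). The coordinates are approximate, and Dublin is used as an assumed stand-in for "Ireland"; none of these numbers come from our measurements.

```typescript
interface Place {
  name: string;
  lat: number; // degrees
  lon: number; // degrees
}

const EARTH_RADIUS_KM = 6371;
const FIBRE_KM_PER_MS = 200; // roughly two thirds of the speed of light in vacuum

function toRadians(degrees: number): number {
  return (degrees * Math.PI) / 180;
}

// Great-circle distance between two points using the haversine formula.
function greatCircleKm(a: Place, b: Place): number {
  const sinLat = Math.sin(toRadians(b.lat - a.lat) / 2);
  const sinLon = Math.sin(toRadians(b.lon - a.lon) / 2);
  const h =
    sinLat * sinLat +
    Math.cos(toRadians(a.lat)) * Math.cos(toRadians(b.lat)) * sinLon * sinLon;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Lower bound on one-way delay along a path of hops, from distance alone.
function minOneWayMs(path: Place[]): number {
  let km = 0;
  for (let i = 1; i < path.length; i++) {
    km += greatCircleKm(path[i - 1], path[i]);
  }
  return km / FIBRE_KM_PER_MS;
}

// Approximate coordinates, for illustration only.
const sanFrancisco: Place = { name: "San Francisco", lat: 37.77, lon: -122.42 };
const newYork: Place = { name: "New York", lat: 40.71, lon: -74.01 };
const ireland: Place = { name: "Dublin (assumed)", lat: 53.35, lon: -6.26 };

const viaIrelandMs = minOneWayMs([sanFrancisco, ireland, newYork]);
const straightLineMs = minOneWayMs([sanFrancisco, newYork]);
console.log(`via Ireland: at least ${viaIrelandMs.toFixed(0)} ms one way`);
console.log(`straight line: at least ${straightLineMs.toFixed(0)} ms one way`);
```

Under these assumptions, the detour over the Atlantic more than doubles the unavoidable delay between moderator and participant, before any real-world overhead is added.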

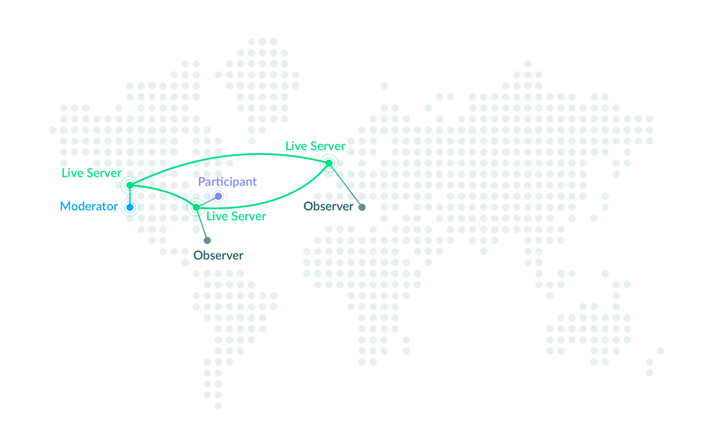

The New Way

In our improved solution, it works like this instead:

Now the audio and video traffic will always go to the closest Live server location instead of making the jump over the Atlantic Ocean. This is a huge improvement in audio/video quality for moderators, participants, and observers alike.

Our new Live servers are currently being rolled out to locations in North and South America, western and central Europe, and Southeast Asia.
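
One common way to decide which server is "closest" is to measure round-trip times from the client and pick the lowest. The sketch below shows that idea only; the region names and the `RttSample` shape are hypothetical, and this post doesn't describe how our players actually choose a server.

```typescript
// Hypothetical shape of one round-trip-time measurement taken by a client.
interface RttSample {
  region: string; // e.g. "us-east" (made-up region name)
  rttMs: number;  // measured round-trip time in milliseconds
}

// Pick the region with the lowest observed round-trip time.
// Returns undefined when there are no samples to compare.
function pickClosestRegion(samples: RttSample[]): string | undefined {
  let winner: RttSample | undefined;
  for (const sample of samples) {
    if (winner === undefined || sample.rttMs < winner.rttMs) {
      winner = sample;
    }
  }
  return winner ? winner.region : undefined;
}

// Example: a client on the US east coast probing three made-up regions.
const probes: RttSample[] = [
  { region: "eu-west", rttMs: 92 },
  { region: "us-east", rttMs: 14 },
  { region: "us-east", rttMs: 19 },
  { region: "ap-southeast", rttMs: 231 },
];
console.log(pickClosestRegion(probes));
```

Measuring from the client, rather than guessing from an IP address, has the advantage of reflecting the network path the media will actually take.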

2. On-demand video streaming for recordings

Our second improvement is all about the complementary part of the research experience: watching the recorded material. You can think of live streaming and on-demand video streaming like the difference between a Skype video call and a Netflix session. With Skype you call in real-time, but with Netflix you watch already recorded material. We do both!

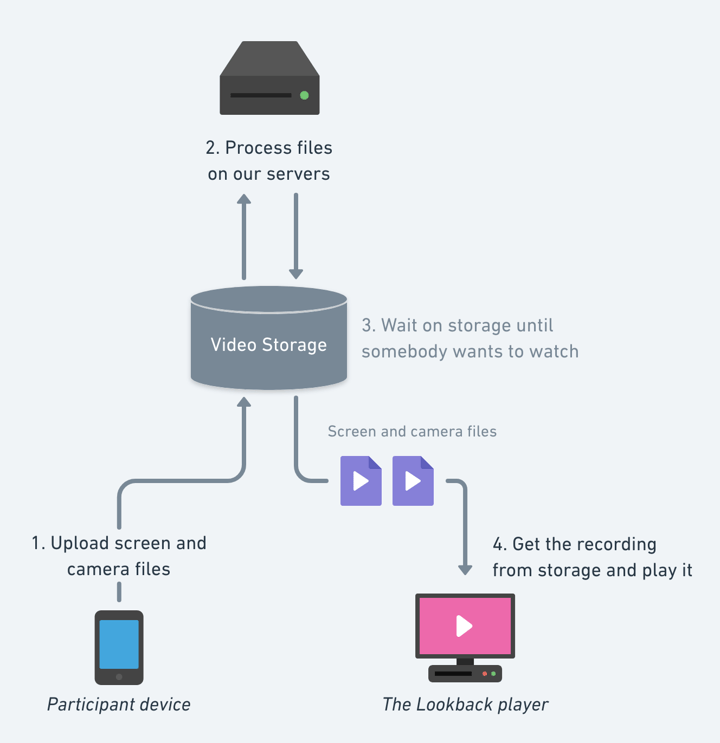

The Old Way

We used to upload recorded sessions to our servers and immediately start processing the raw material into video made for fast playback. Something like this:

This was sub-optimal for a number of reasons:

- We needed to process all recordings (2) before they were ready to watch. This meant customers had to wait.

- The processed recordings (4) were rendered in a single format and quality. There was no way to vary the video quality, e.g. to serve lower quality if the customer’s connection was bad.

- If the test participant used their face camera, we had to serve two video files to the Lookback player (4). Playing two video files at the same time yields notoriously bad results both in playback quality and syncing. We could never ensure perfectly synced audio and video because of how video works in browsers.

- We had to keep servers up and running for processing recordings (2). This is a burden for us to maintain, as well as a waste of money. We also had to store processed recordings that might’ve never been watched in our video storage (3). This is a waste of space.

We obviously really care about the watching part of the research experience. That’s why we set out to create something better.

The New Way

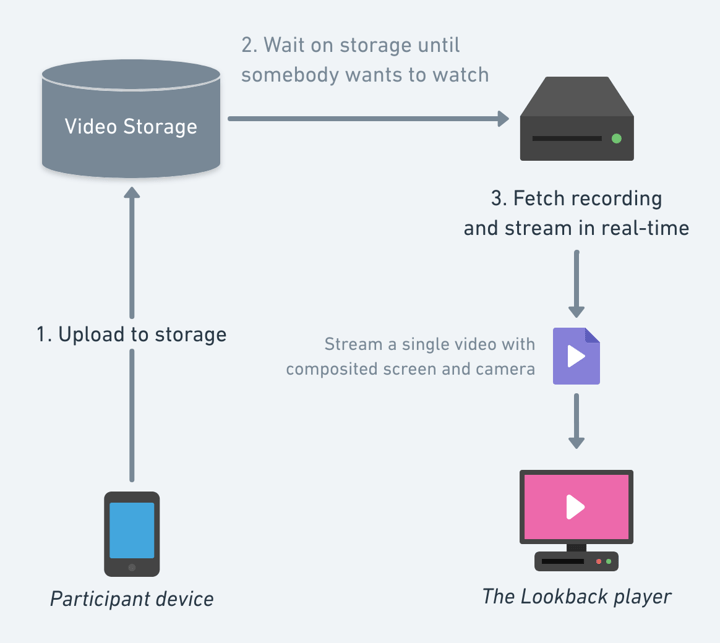

In order to move to a Netflix-style solution, we built a new video streaming server to grab raw files from storage and serve them on-demand to the Lookback player. The big feat here is that the video is transcoded and composited on the fly and served as one. “Composited” means that we merge the screen and face camera video together in real time and stream to the player, eliminating the need to play back two videos simultaneously – no more sync issues!

It works like this:

Fewer steps – simplicity!

Notable features:

- A single video stream is delivered, instead of serving two (one for the test participant’s screen, one for the face camera).

- We skip the processing step and give you video immediately on demand, without delay.

- We can adaptively change the quality of the video on demand, even in the middle of the recording. If you change from spotty 3G to speedy WiFi, video quality immediately goes up. This is possible with the use of HLS (HTTP Live Streaming).

- Since the video is streamed, there’s no need for the browser to download the full recording from our storage when playing. It only downloads the bytes as they’re needed.
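
For the curious, here is a small sketch of how adaptive quality works in HLS in general. The server advertises several renditions of the same video in a master playlist, and the player switches between them based on the bandwidth it measures. The rendition ladder, file names, and the 80% safety margin below are made up for illustration and are not our actual settings.

```typescript
// One quality level of the same video. Values used below are hypothetical.
interface Rendition {
  bandwidth: number; // peak bits per second needed to play this rendition
  width: number;
  height: number;
  uri: string;       // media playlist for this rendition
}

// Build an HLS master playlist that advertises every rendition.
function masterPlaylist(renditions: Rendition[]): string {
  const lines = ["#EXTM3U"];
  for (const r of renditions) {
    lines.push(
      `#EXT-X-STREAM-INF:BANDWIDTH=${r.bandwidth},RESOLUTION=${r.width}x${r.height}`
    );
    lines.push(r.uri);
  }
  return lines.join("\n") + "\n";
}

// Pick the best rendition that fits within a safety margin of the measured
// throughput. If nothing fits, fall back to the lowest-bandwidth rendition.
// Throws if the list of renditions is empty.
function pickRendition(
  renditions: Rendition[],
  measuredBps: number,
  safety: number = 0.8
): Rendition {
  const budget = measuredBps * safety;
  return renditions.reduce((best, r) => {
    const fits = r.bandwidth <= budget;
    const bestFits = best.bandwidth <= budget;
    if (fits && (!bestFits || r.bandwidth > best.bandwidth)) return r;
    if (!fits && !bestFits && r.bandwidth < best.bandwidth) return r;
    return best;
  });
}

const ladder: Rendition[] = [
  { bandwidth: 800000, width: 640, height: 360, uri: "360p.m3u8" },
  { bandwidth: 2500000, width: 1280, height: 720, uri: "720p.m3u8" },
  { bandwidth: 5000000, width: 1920, height: 1080, uri: "1080p.m3u8" },
];

console.log(masterPlaylist(ladder));
console.log(pickRendition(ladder, 1200000).uri);  // spotty connection
console.log(pickRendition(ladder, 10000000).uri); // speedy connection
```

Because each rendition is cut into short segments, the player can make this choice again for every segment, which is what allows quality to change in the middle of a recording.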

This video serving solution is currently being rolled out across the participant platforms, starting with desktop recordings.

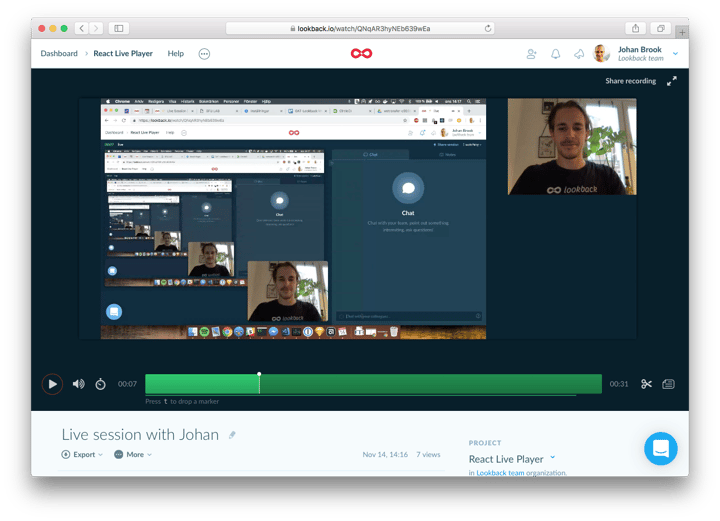

One more thing…

We have one last thing to show you. In order to make use of the distributed nature of our new Live servers described above, we’ve completely reworked our Live player under the hood. The new version looks (almost) the same as the previous one, save for a few visual updates. The new features include:

- Moderators can now select which camera and microphone to use when calling a participant.

- Moderators and observers can resize the sidebar, or hide it completely.
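
If you're wondering how camera and microphone selection works in a browser in general, it usually comes down to two standard calls: `navigator.mediaDevices.enumerateDevices()` to list devices, and `navigator.mediaDevices.getUserMedia()` with a `deviceId` constraint to open the chosen ones. The sketch below keeps the logic in plain functions so it can run anywhere; the helper names (`listInputs`, `constraintsFor`) and device ids are hypothetical and not taken from our player.

```typescript
// Mirrors the fields of the entries returned by enumerateDevices().
interface InputDevice {
  deviceId: string;
  kind: string;  // "videoinput", "audioinput" or "audiooutput"
  label: string; // empty until the user has granted media permission
}

type DeviceChoice = true | { deviceId: { exact: string } };

interface StreamConstraints {
  video: DeviceChoice;
  audio: DeviceChoice;
}

// Split the device list into the two pickers a moderator would see.
function listInputs(devices: InputDevice[]) {
  return {
    cameras: devices.filter((d) => d.kind === "videoinput"),
    microphones: devices.filter((d) => d.kind === "audioinput"),
  };
}

// Build getUserMedia constraints for the chosen devices.
// When no id is given, `true` lets the browser pick its default device.
function constraintsFor(cameraId?: string, microphoneId?: string): StreamConstraints {
  return {
    video: cameraId ? { deviceId: { exact: cameraId } } : true,
    audio: microphoneId ? { deviceId: { exact: microphoneId } } : true,
  };
}

// In a browser, usage would look roughly like this:
//   const devices = await navigator.mediaDevices.enumerateDevices();
//   const { cameras, microphones } = listInputs(devices);
//   const stream = await navigator.mediaDevices.getUserMedia(
//     constraintsFor(cameras[0].deviceId, microphones[0].deviceId)
//   );
```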

But the big deal is under the hood:

- The Live player is much more reliable than before. And faster to start up ⚡

- It uses our new Live server backend infrastructure to deliver high quality audio and video across continents.

- We’ve polished the user experience in the player, and made it better at handling unexpected errors with web cameras and microphones.
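
As an illustration of what handling camera and microphone errors can look like, browsers reject `getUserMedia()` with an error whose `name` says what went wrong, and a player can turn that into something a human can act on. The error names below are the standard ones; the function name and the messages are hypothetical and not the wording our player uses.

```typescript
// Translate a getUserMedia() failure into a message the user can act on.
// Only the `name` field of the error is inspected.
function describeMediaError(error: { name: string }): string {
  switch (error.name) {
    case "NotAllowedError":
      return "Camera or microphone access was denied. Check your browser's permission settings.";
    case "NotFoundError":
      return "No camera or microphone was found. Is one plugged in?";
    case "NotReadableError":
      return "The camera or microphone could not be started. Another application may be using it.";
    case "OverconstrainedError":
      return "The selected device is not available. Try choosing another one.";
    default:
      return "Something went wrong while starting the camera or microphone.";
  }
}

// In a browser, usage would look roughly like this:
//   try {
//     await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
//   } catch (error) {
//     showMessage(describeMediaError(error as { name: string })); // showMessage is hypothetical
//   }
console.log(describeMediaError({ name: "NotFoundError" }));
```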

We hope you’ll like it! It was very fun to re-build.

During the past months, we’ve been going all in on reliability in our platform. That includes huge projects like the ones brought up in this post, as well as doubling down on fixing all serious issues we discover or our customers report. Working with video is sometimes hard, but we strive to simplify and improve. We hope that this will make your research experience more reliable, so you can go build things people love and understand ✨

Happy researching! 🤓